2016 might not have been the best of years, so instead of doing a Christmas card I’ve written a magical fantasy story.

A happy Christmas to all, and here’s hoping we have a better 2017.

Now that everyone’s distracted with the Supreme Court case on Brexit, you can expect the government to sneak out something it’s ashamed of. Health secretary Jeremy Hunt has decided to ignore the wishes of over a million people who opted out of having their hospital records given to third parties such as drug companies, and the ICO has decided to pretend that the anonymisation mechanisms he says he’ll use instead are sufficient. One gently smoking gun is the fifth bullet in a new webpage here, where the Department of Health claims that when it says the data are anonymous, your wishes will be ignored. The news has been broken in an article in the Health Service Journal (it’s behind a paywall, as a splendid example of transparency) with the Wellcome Trust praising the ICO’s decision not to take action against the Department. We are assured that “the data is seen as crucial for vital research projects”. The exchange of letters with privacy campaigners that led up to this decision can be found here, here, here, here, here, here, and here.

An early portent of this u-turn was reported here in 2014 when officials reckoned that the only way they could still do administrative tasks such as calculating doctors’ bonuses was to just pretend that the data are anonymous even though they know they aren’t really. Then, after the care.data scandal showed that a billion records had been sold to over a thousand purchasers, we reported here how HES data had also been sold and how the minister seemed to have misled parliament about this.

I will be talking about the ethics of all this on Thursday. Even if ministers claim that stolen medical records are OK to use, researchers must not act as if this is true; if patients end up trusting doctors as little as we trust politicians, then medical research will be in serious trouble. There is a video of a previous version of this talk here.

Meanwhile, if you’re annoyed that Jeremy Hunt proposes to ignore not just your privacy rights but your express wishes, you can send him a notice under Section 10 of the Data Protection Act forbidding him from disclosing your data. The Department has complied with such notices in the past, albeit with bad grace as they have no automated way to do it. If thousands of people serve such notices, they may finally have to stand up to the drug company lobbyists and write the missing software. For more, see here.

Last week I gave a keynote talk at CCS about DigiTally, a project we’ve been working on to extend mobile payments to areas where the network is intermittent, congested or non-existent.

The Bill and Melinda Gates Foundation called for ways to increase the use of mobile payments, which have been transformative in many less developed countries. We did some research and found that network availability and cost were the two main problems. So how could we do phone payments where there’s no network, with a marginal cost of zero? If people had smartphones you could use some combination of NFC, bluetooth and local wifi, but most of the rural poor in Africa and Asia use simple phones without any extra communications modalities, other than those which the users themselves can provide. So how could you enable people to do phone payments by simple user actions? We were inspired by the prepayment electricity meters I helped develop some twenty years ago; meters conforming to this spec are now used in over 100 countries.

We got a small grant from the Gates Foundation to do a prototype and field trial. We designed a system, DigiTally, where Alice can pay Bob by exchanging eight-digit MACs that are generated, and verified, by the SIM cards in their phones. For rapid prototyping we used overlay SIMs (which are already being used in a different phone payment system in Africa). The cryptography is described in a paper we gave at the Security Protocols Workshop this spring.
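As a rough illustration of what an eight-digit MAC can look like, here is a minimal sketch that truncates an HMAC to eight decimal digits. It is not the DigiTally protocol, which is described in the paper: the choice of HMAC-SHA256, the shared key, the message layout and the function names are all assumptions made for the example.

```python
import hashlib
import hmac


def eight_digit_mac(key: bytes, message: bytes) -> str:
    """Return a MAC over `message`, truncated to eight decimal digits.

    Assumption: HMAC-SHA256 with a shared key; the real system may use
    a different MAC and key arrangement.
    """
    digest = hmac.new(key, message, hashlib.sha256).digest()
    # Reduce the first 8 bytes of the digest to a number below 10**8.
    value = int.from_bytes(digest[:8], "big") % 10**8
    return f"{value:08d}"


def verify(key: bytes, message: bytes, code: str) -> bool:
    """Recompute the code and compare it in constant time."""
    return hmac.compare_digest(eight_digit_mac(key, message), code)


# Hypothetical transaction fields, for illustration only.
shared_key = b"demo-key-not-for-real-use"
payment = b"payer=alice;payee=bob;amount=250;counter=7"
code = eight_digit_mac(shared_key, payment)
```

A code this short is easy to read out or type on a basic phone, but it can be guessed with probability of about one in a hundred million per attempt, so any real design also has to limit retries.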

Last month we took the prototype to Strathmore University in Nairobi to do a field trial involving usability studies in their bookshop, coffee shop and cafeteria. The results were very encouraging and I described them in my talk at CCS (slides). There will be a paper on this study in due course. We’re now looking for partners to do deployment at scale, whether in phone payments or in other apps that need to support value transfer in delay-tolerant networks.

There has been press coverage in the New Scientist, Engadget and Impress (original Japanese version).

In two weeks’ time we’re starting an open course in security economics. I’m teaching this together with Rainer Boehme, Tyler Moore, Michel van Eeten, Carlos Ganan, Sophie van der Zee and David Modic.

Over the past fifteen years, we’ve come to realise that many information security failures arise from poor incentives. If Alice guards a system while Bob pays the cost of failure, things can be expected to go wrong. Security economics is now an important research topic: you can’t design secure systems involving multiple principals if you can’t get the incentives right. And it goes way beyond computer science. Without understanding how incentives play out, you can’t expect to make decent policy on cybercrime, on consumer protection or indeed on protecting critical national infrastructure.

We first did the course last year as a paid-for course with EdX. Our agreement with them was that they’d charge for it the first time, to recoup the production costs, and thereafter it would be free.

So here it is as a free course. Spread the word!

At our security group meeting on the 19th August, Sergei Skorobogatov demonstrated a NAND backup attack on an iPhone 5c. I typed in six wrong PINs and it locked; he removed the flash chip (which he’d desoldered and led out to a socket); he erased and restored the changed pages; he put it back in the phone; and I was able to enter a further six wrong PINs.

Sergei has today released a paper describing the attack.

During the recent fight between the FBI and Apple, FBI Director Jim Comey said this kind of attack wouldn’t work.

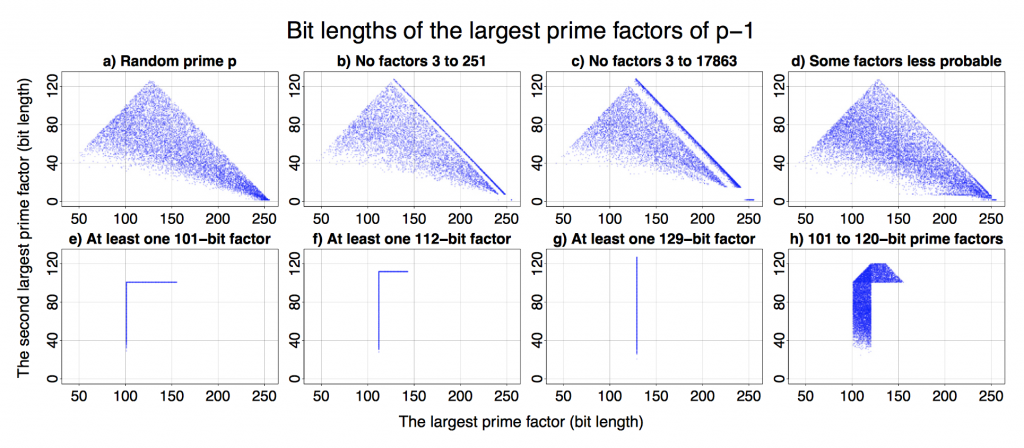

Petr Svenda et al from Masaryk University in Brno won the Best Paper Award at this year’s USENIX Security Symposium with their paper classifying public RSA keys according to their source.

I really like the simplicity of the original assumption. The starting point of the research was that different crypto/RSA libraries use slightly different elimination methods and “cut-off” thresholds to find suitable prime numbers. They thought these differences should be sufficient to detect a particular cryptographic implementation and all that was needed were public keys. Petr et al confirmed this assumption. The best paper award is a well-deserved recognition as I’ve worked with and followed Petr’s activities closely.

The authors created a method for efficient identification of the source (software library or hardware device) of RSA public keys. It resulted in a classification of keys into more than a dozen categories. This classification can be used as a fingerprint that decreases the anonymity of users of Tor and other privacy enhancing mailers or operators.
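To give a feel for how a public modulus alone might carry a fingerprint, here is a small sketch that extracts a few cheap features from a modulus and tallies them over a set of keys. The particular features (top byte, residue mod 3, residue mod 4) and the function names are illustrative assumptions; this post does not say which features the paper actually uses.

```python
from collections import Counter


def modulus_features(n: int) -> tuple:
    """Extract simple features from an RSA public modulus n.

    Assumed, illustrative features: the eight most significant bits
    of n, n mod 3, and n mod 4.
    """
    bits = n.bit_length()
    top_byte = n >> (bits - 8)
    return (top_byte, n % 3, n % 4)


def feature_histogram(moduli) -> Counter:
    """Count how often each feature tuple occurs in a set of moduli."""
    return Counter(modulus_features(n) for n in moduli)
```

If two libraries pick their primes differently, histograms built from their keys would be expected to differ, and a classifier could then assign an unknown key to the most likely source.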

All that is a result of an analysis of over 60 million freshly generated keys from 22 open- and closed-source libraries and from 16 different smart-cards. While the findings are fairly theoretical, they are demonstrated with a series of easy to understand graphs (see above).

I can’t see an easy way to exploit the results for immediate cyber attacks. However, we started looking into practical applications. There are interesting opportunities for enterprise compliance audits, as the classification only requires access to datasets of public keys – often created as a by-product of internal network vulnerability scanning.

An extended version of the paper is available from http://crcs.cz/rsa.

At PETS 2016 we presented a new side-channel attack in our paper Don’t Interrupt Me While I Type: Inferring Text Entered Through Gesture Typing on Android Keyboards. This was part of Laurent Simon’s thesis, and won him the runner-up to the best student paper award.

We found that software on your smartphone can infer words you type in other apps by monitoring the aggregate number of context switches and the number of hardware interrupts. These are readable by permissionless apps within the virtual procfs filesystem (mounted under /proc). Three previous research groups had found that other files under procfs support side channels. But the files they used contained information about individual apps, e.g. the file /proc/uid_stat/victimapp/tcp_snd contains the number of bytes sent by “victimapp”. These files are no longer readable in the latest Android version.
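For readers unfamiliar with procfs, the sketch below shows how aggregate counters of this kind can be pulled out of the text of `/proc/stat` on Linux, where the `ctxt` line gives the total number of context switches and the first number on the `intr` line gives the total number of interrupts serviced. Whether these are the exact files and fields used in the paper is an assumption, and the function names are made up for the example.

```python
def parse_proc_stat(text: str) -> dict:
    """Pull the system-wide counters out of /proc/stat-style text."""
    counters = {}
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "ctxt":
            # Total context switches since boot, across all CPUs.
            counters["context_switches"] = int(fields[1])
        elif fields[0] == "intr":
            # First number is the total count of interrupts serviced.
            counters["interrupts"] = int(fields[1])
    return counters


def delta(before: dict, after: dict) -> dict:
    """Change in each counter between two samples."""
    return {name: after[name] - before[name] for name in before}


def sample(path: str = "/proc/stat") -> dict:
    """Read and parse the counters from a live system (Linux only)."""
    with open(path) as f:
        return parse_proc_stat(f.read())
```

Sampling these counters at a high rate and looking at the deltas gives a time series of system activity, which is the raw material for this kind of side channel.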

We found that the “global” files – those that contain aggregate information about the system – also leak. So a curious app can monitor these global files as a user types on the phone and try to work out the words. We looked at smartphone keyboards that support “gesture typing”: a novel input mechanism democratized by SwiftKey, whereby a user drags their finger from letter to letter to enter words.

This work shows once again how difficult it is to prevent side channels: they come up in all sorts of interesting and unexpected ways. Fortunately, we think there is an easy fix: Google should simply disable access to all procfs files, rather than just the files that leak information about individual apps. Meanwhile, if you’re developing apps for privacy or anonymity, you should be aware that these risks exist.

I am at the Privacy Enhancing Technologies Symposium (PETS 2016) in Darmstadt until Friday, and will try to liveblog some of the sessions in followups to this post. (I can’t do them all as there are some parallel sessions.)

When Lying Feels the Right Thing to Do reports three studies we did on what made people less or more likely to submit fraudulent insurance claims. Our first study found that people were more likely to cheat when rejected; the other two showed that rejected claimants were just as likely to cheat when this didn’t lead to financial gain, but that they felt more strongly when there was no money involved.

Our research was conducted as part of a broader research programme to investigate the deterrence of deception; our goal was to understand how to design better websites. However we can’t help wondering whether it might shine some light on the UK’s recent political turmoil. The Brexit campaigners were minorities of both main political parties and their anti-EU rhetoric had been rejected by the political mainstream for years; they had ideological rather than selfish motives. They ran a blatantly deceptive campaign, persisting in obvious untruths but abandoning them promptly after winning the vote. Rejection is not the only known factor in situational deception; it’s known, for example, that people with unmet goals are more likely to cheat than people who are simply doing their best, and that one bad apple can have a cascading effect. But it still makes you think.

The outcome and aftermath of the referendum have left many people feeling rejected, from remain voters through people who will lose financially to foreign residents of the UK. Our research shows that feelings of rejection can increase cheating by 15-30%; perhaps this might have measurable effects in some sectors. How one might disentangle this from the broader effects of diminished social solidarity, and from politicians simply setting a bad example, could be an interesting problem for social scientists.

I’m sitting in the Inaugural Cybercrime Conference of the Cambridge Cloud Cybercrime Centre, and will attempt to liveblog the talks in followups to this post.